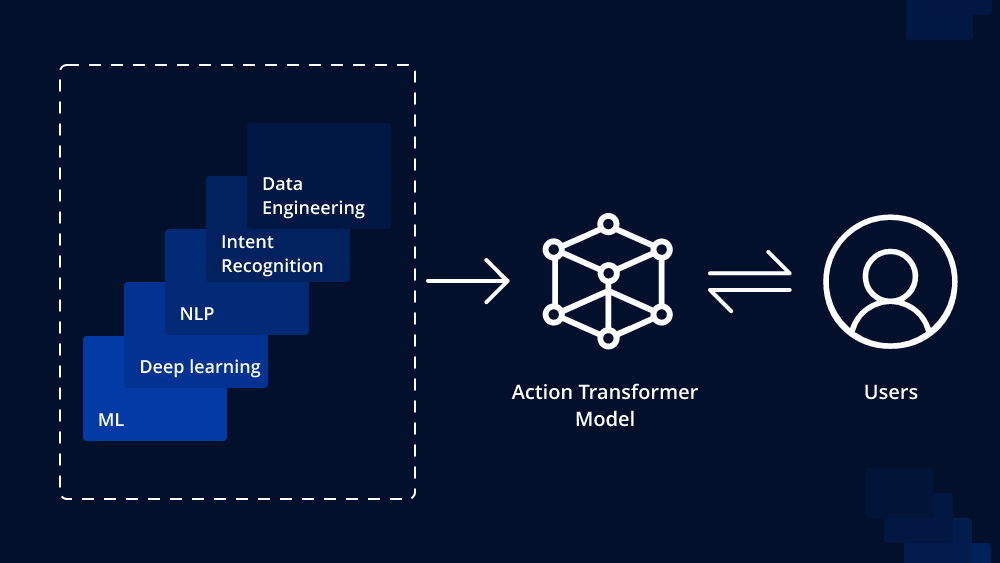

In natural language processing (NLP), the Action Transformer Model has drawn attention as a model that can both comprehend and generate human-like text, which could change how we interact with machines and automate tasks. In this article, we look at Action Transformer Models: their architecture, their applications, and the potential they hold for the future.

Understanding the Action Transformer Model

The Action Transformer Model builds on the Transformer architecture, which has already performed well across a range of NLP tasks. It was first introduced in the research paper “Action Transformer: A Robust Transformer for Reinforcement Learning,” presented at NeurIPS 2019.

At its core, the Action Transformer Model is designed to bridge the gap between understanding and generation in NLP. It combines the ability to comprehend context with the ability to generate coherent responses or actions, making it a strong candidate for applications that need both.

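The Action Transformer Model comprises several key components, including self-attention mechanisms, feed-forward networks, and positional encodings. Self-attention lets each token weigh every other token in the sequence, the feed-forward network transforms each position's representation independently, and positional encodings tell the model where each token sits in the sequence. Together, these elements let the model process the context and generate relevant responses.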
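To make these three components more concrete, here is a minimal sketch in plain Python of how they work in a standard Transformer. It is not the Action Transformer's actual implementation: the function names, the toy embeddings, and the choice to skip the learned query/key/value projections (so Q = K = V = x) are all assumptions made for illustration.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product attention. Assumption: no learned projections, so Q = K = V = x."""
    d = len(x[0])
    outputs, all_weights = [], []
    for query in x:
        # Compare this token with every token in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted mix of all token vectors.
        outputs.append([sum(w * tok[c] for w, tok in zip(weights, x)) for c in range(d)])
    return outputs, all_weights


def feed_forward(vec, w1, b1, w2, b2):
    """Position-wise two-layer network with ReLU; each row of w1/w2 feeds one output unit."""
    hidden = [max(0.0, sum(v * w for v, w in zip(vec, row)) + b) for row, b in zip(w1, b1)]
    return [sum(h * w for h, w in zip(hidden, row)) + b for row, b in zip(w2, b2)]


# Hypothetical 3-token sequence with 4-dimensional embeddings.
embeddings = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.3, 0.3]]
positions = positional_encoding(len(embeddings), 4)
encoded = [[e + p for e, p in zip(tok, pos)] for tok, pos in zip(embeddings, positions)]
attended, attention_weights = self_attention(encoded)
```

In a real model, the attention inputs would first pass through learned weight matrices, and several attention heads and layers would be stacked; the sketch keeps only the core arithmetic.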

Applications of Action Transformer Models

The versatility of Action Transformer Models is evident in their numerous applications across various domains. Let’s explore some of the most exciting use cases:

1. Chatbots and Virtual Assistants

One of the most prominent applications of Action Transformer Models is in chatbots and virtual assistants. These models can understand user queries and generate coherent responses, making them valuable for customer support, information retrieval, and personal assistance.

2. Content Generation

Content generation, including text summarization, article writing, and creative writing, benefits immensely from Action Transformer Models. These models can synthesize information from various sources and generate human-like text with remarkable coherence.

3. Language Translation

In machine translation, Action Transformer Models are a strong fit. They can pick up on the nuances of language and produce translations that preserve the subtleties and context of the source text.

4. Reinforcement Learning

The original paper on Action Transformer Models highlighted their utility in reinforcement learning. These models can be used to control agents in environments, making them ideal for tasks that require understanding and generating actions based on the observed context.
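As a rough illustration of what "generating actions based on the observed context" can look like, here is a toy policy in plain Python. Everything in it is hypothetical: the action set, the weights, and especially the averaging step, which stands in for the Transformer encoder that a real model would use to summarize the observations.

```python
import math
import random

ACTIONS = ["left", "right", "stay"]  # hypothetical action set


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def action_probabilities(context, weights):
    """Summarize recent observations, then score each action with a linear layer.

    Assumption: mean pooling replaces the Transformer encoder for brevity.
    """
    dim = len(context[0])
    summary = [sum(obs[i] for obs in context) / len(context) for i in range(dim)]
    logits = [sum(w * s for w, s in zip(row, summary)) for row in weights]
    return softmax(logits)


def choose_action(context, weights, rng=None):
    """Pick the most likely action, or sample one if a random generator is given."""
    probs = action_probabilities(context, weights)
    if rng is None:
        return ACTIONS[probs.index(max(probs))]
    return rng.choices(ACTIONS, weights=probs, k=1)[0]


# Two hypothetical 2-dimensional observations and one weight row per action.
context = [[1.0, 0.0], [1.0, 0.0]]
weights = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]
greedy_action = choose_action(context, weights)
sampled_action = choose_action(context, weights, rng=random.Random(0))
```

Greedy selection is handy for evaluation, while sampling from the probabilities is the usual way to keep an agent exploring during training.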

5. Knowledge Graph Completion

Action Transformer Models also find applications in completing knowledge graphs. They can infer missing information from existing data, contributing to the development of more comprehensive knowledge bases.

6. Content Recommendation

Content recommendation systems utilize these models to understand user preferences and generate personalized recommendations for movies, books, music, and other forms of content.

The Future of Action Transformer Models

The outlook for Action Transformer Models is promising. As research in NLP continues to advance, we can expect further developments in these models, leading to better performance and broader applications.

1. Improved Comprehension

Future iterations of Action Transformer Models are likely to improve their comprehension capabilities. This may involve better understanding of context, nuances, and even emotions, allowing for more context-aware responses.

2. Multimodal Integration

The integration of text with other forms of data, such as images and videos, is a fascinating area of research for Action Transformer Models. Combining text comprehension with the ability to generate text that complements visual data can open up new avenues in areas like image captioning and video analysis.

3. Real-time Applications

Real-time applications, such as simultaneous translation during live events or instant response in customer service, stand to benefit from the speed and accuracy of Action Transformer Models.

4. Ethical Considerations

As these models become increasingly sophisticated, ethical considerations will take center stage. Issues like bias, privacy, and misuse must be addressed to ensure the responsible development and deployment of Action Transformer Models.

Challenges Ahead

Despite the remarkable capabilities of Action Transformer Models, they are not without challenges. One major hurdle is the enormous computational resources required to train and fine-tune these models. Additionally, addressing biases in the training data and model output is a complex issue that demands ongoing research and vigilance.
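One concrete source of that cost is self-attention itself: in a standard Transformer, every token is compared with every other token, so the number of attention scores grows with the square of the sequence length. The short sketch below counts those scores; the function name and the example sizes are assumptions chosen for illustration, not figures from any specific Action Transformer Model.

```python
def attention_score_entries(seq_len, num_heads, num_layers):
    """Count attention scores in one forward pass of a standard Transformer.

    Each head in each layer builds a seq_len x seq_len score matrix.
    """
    return seq_len * seq_len * num_heads * num_layers


# Hypothetical model size: 8 heads, 6 layers.
short_input = attention_score_entries(512, num_heads=8, num_layers=6)
long_input = attention_score_entries(1024, num_heads=8, num_layers=6)
growth = long_input / short_input  # effect of doubling the sequence length
```

Doubling the input length quadruples this count, which is part of why long inputs are expensive to train on.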

Conclusion

Action Transformer Models mark a significant step forward in natural language processing. Because they can both understand and generate text, they could reshape industries from customer service to content generation and beyond. The future looks promising as research extends what these models can do, but it also brings ethical and practical challenges that call for responsible development and deployment. In the years to come, we can expect Action Transformer Models to play a pivotal role in how we interact with technology and automate tasks.